Undergraduate at Fudan University, majoring in statistics

I am a senior undergraduate student majoring in Statistics at Fudan University.

I am currently advised by Prof. Peng Li at THUNLP, where I have been involved in several exciting research projects. Over the past two years, I have also been fortunate to work under the guidance of Prof. Qi Zhang and Prof. Tao Gui at Fudan NLP.

I am working with Prof. Yiwei Wang at UCM NLP. I am also glad to have joined Shanghai AI Lab as a research intern.

Broadly, my research interests lie in 3D vision; multimodal perception, understanding, and generation; VLM/VLA; and NLP applications. In the coming year, I plan to explore more interdisciplinary directions and collaborate closely with diverse research groups.

I am currently seeking Ph.D. opportunities for Fall 2027.

Education

- Fudan University, B.S. in Statistics, Sep. 2022 - Now

Experience

- Fudan NLP Lab, Visiting Student, Nov. 2022 - Apr. 2024
- Tsinghua NLP Lab, Visiting Student, May 2024 - Now
- Shanghai AI Lab, Intern, Jan. 2026 - Now
- UCM NLP Lab, Visiting Student, Jan. 2026 - Now

News

Selected Publications

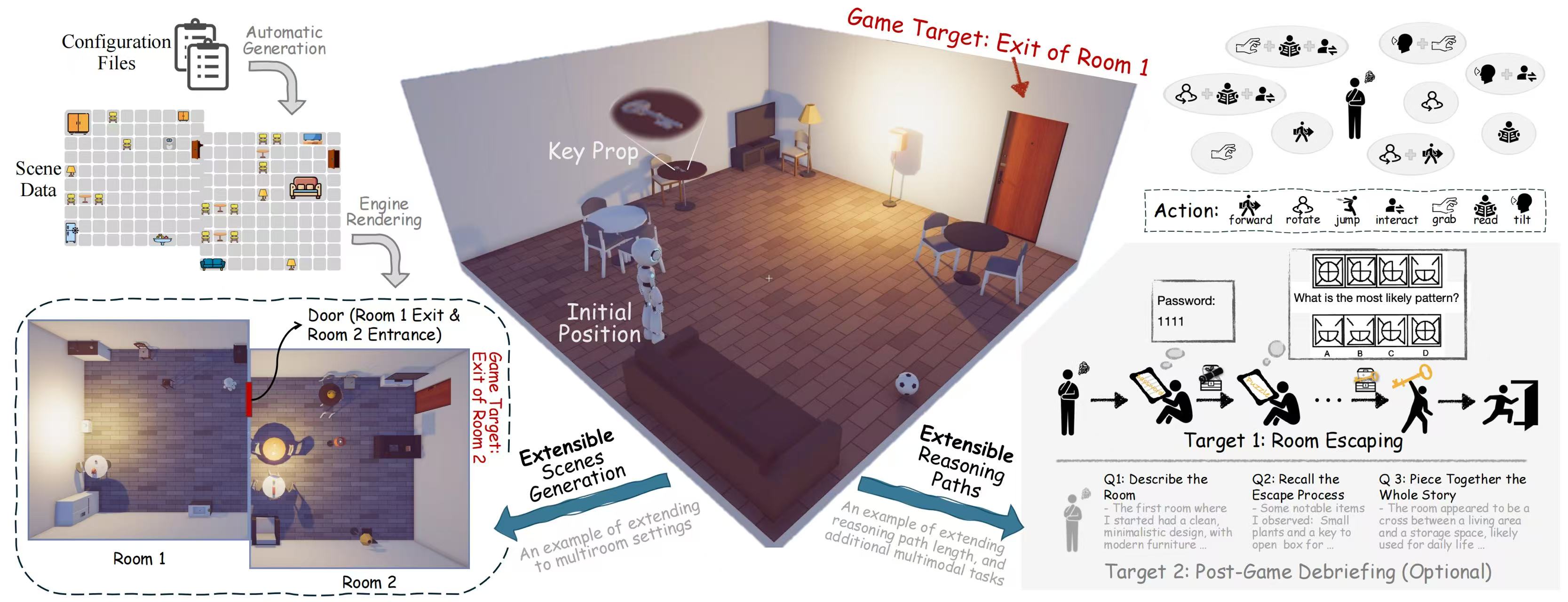

How Do Multimodal Large Language Models Handle Complex Multimodal Reasoning? Placing Them in An Extensible Escape Game

Ziyue Wang*, Yurui Dong*, Fuwen Luo, Minyuan Ruan, Zhili Cheng, Chi Chen, Peng Li#, Yang Liu# (* equal contribution, # corresponding author)

International Conference on Computer Vision (ICCV) 2025, Poster

The rapid advancement of Multimodal Large Language Models (MLLMs) has spurred interest in complex multimodal reasoning tasks in real-world and virtual environments, which require coordinating multiple abilities, including visual perception, visual reasoning, spatial awareness, and target deduction. However, existing evaluations primarily assess final task completion, often reducing assessment to isolated abilities such as visual grounding and visual question answering. Less attention is given to comprehensively and quantitatively analyzing the reasoning process in multimodal environments, which is crucial for understanding model behaviors and underlying reasoning mechanisms beyond mere task success. To address this, we introduce MM-Escape, an extensible benchmark for investigating multimodal reasoning, inspired by real-world escape games. MM-Escape emphasizes intermediate model behaviors alongside final task completion. To achieve this, we develop EscapeCraft, a customizable and open environment that enables models to engage in free-form exploration for assessing multimodal reasoning. Extensive experiments show that MLLMs, regardless of scale, can successfully complete the simplest room escape tasks, with some exhibiting human-like exploration strategies. Yet performance drops dramatically as task difficulty increases. Moreover, we observe that performance bottlenecks vary across models, revealing distinct failure modes and limitations in their multimodal reasoning abilities, such as repetitive trajectories without adaptive exploration, getting stuck in corners due to poor visual spatial awareness, and ineffective use of acquired props, such as the key. We hope our work sheds light on new challenges in multimodal reasoning and uncovers potential improvements in MLLM capabilities.

Controllable Emotion Generation with Emotion Vectors

Yurui Dong*, Luozhijie Jin*, Yao Yang, Bingjie Lu, Jiaxi Yang#, Zhi Liu# (* equal contribution, # corresponding author)

Under review. Preprint, arXiv, 2025

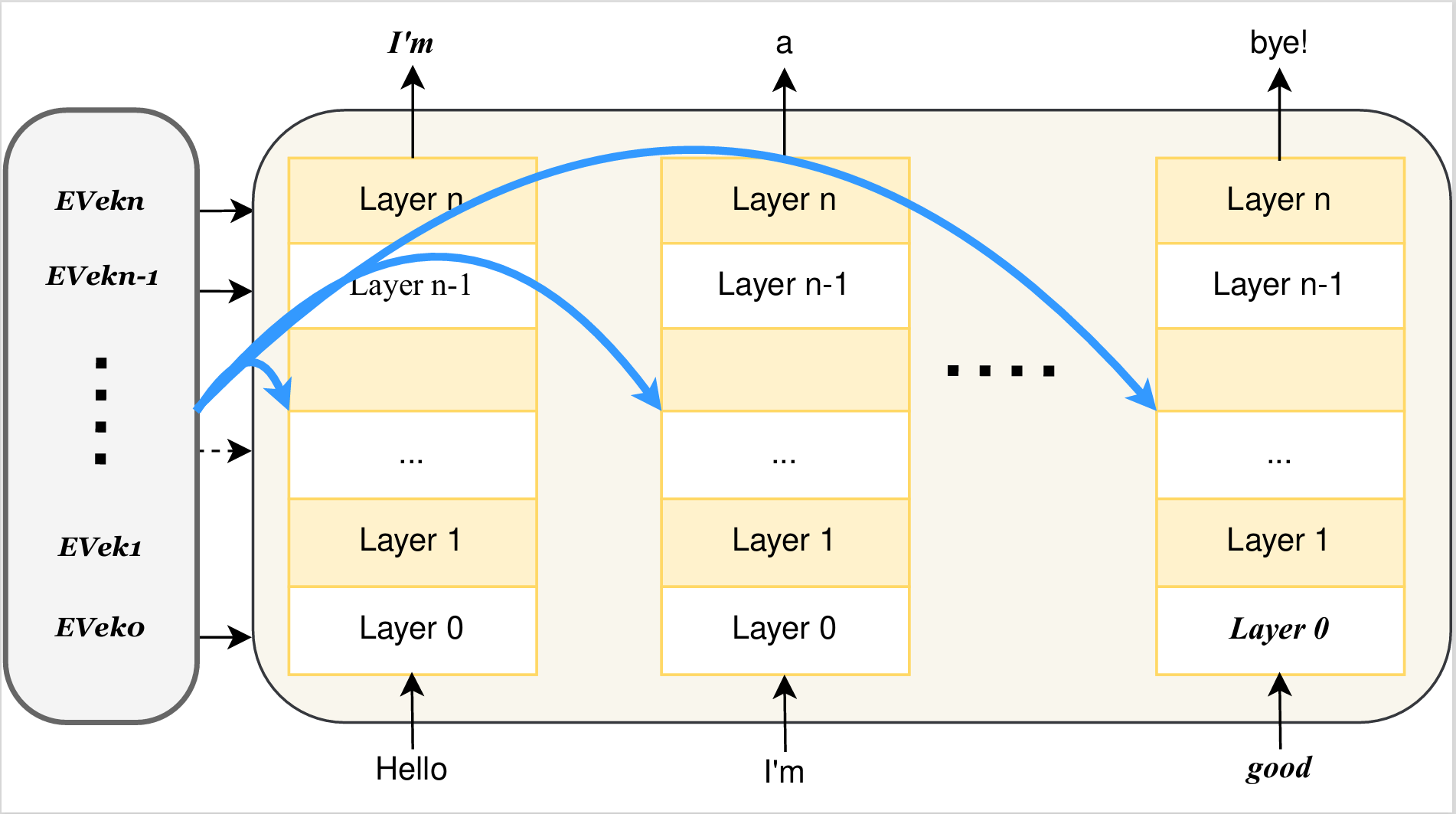

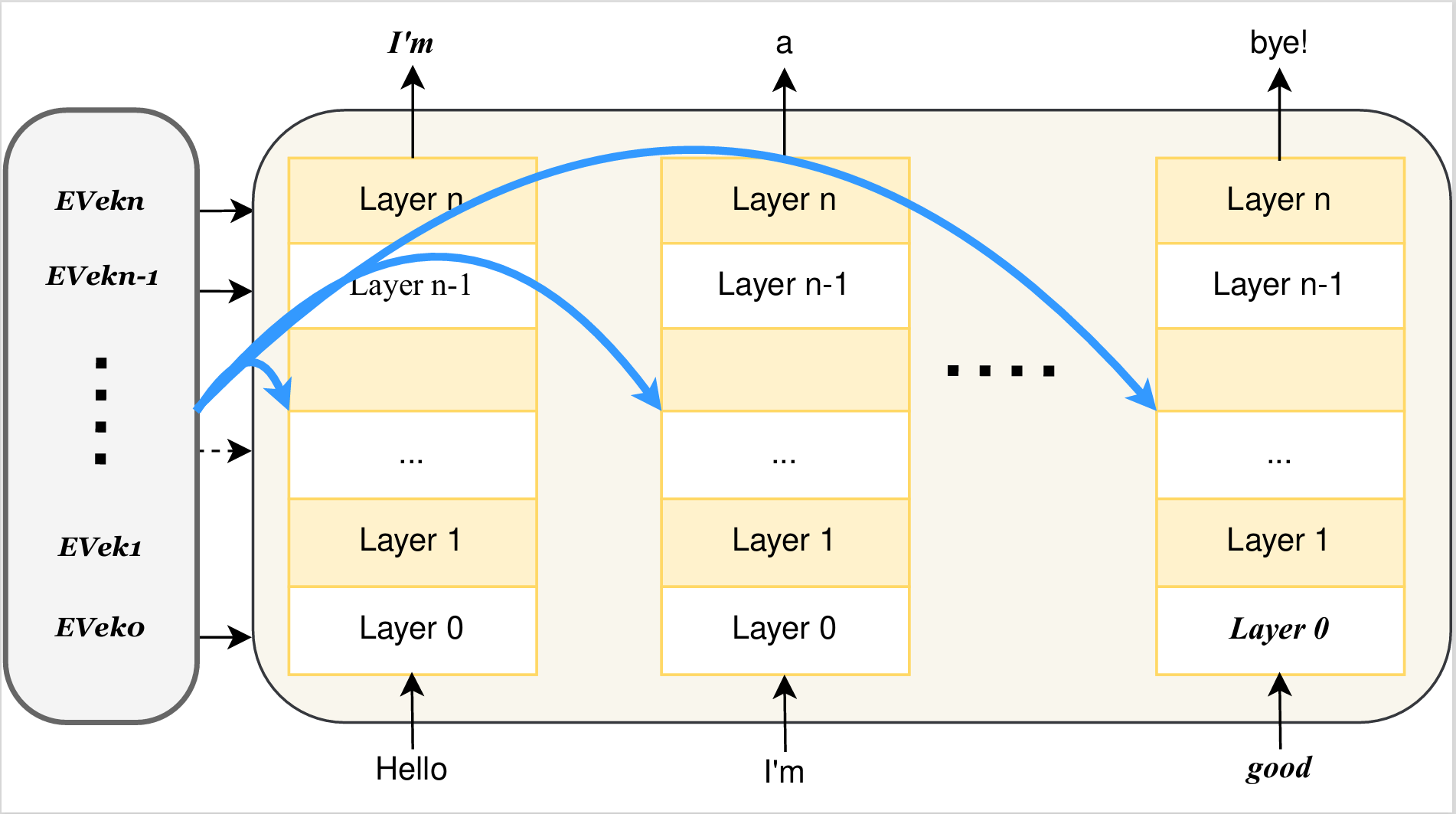

In recent years, technologies based on large language models (LLMs) have made remarkable progress in many fields, especially customer service, content creation, and embodied intelligence, showing broad application potential. However, the ability of LLMs to express emotions with proper tone and timing, in both direct and indirect forms, remains insufficient despite its importance. Few works have studied how to build controllable emotional expression capabilities in LLMs. In this work, we propose a method for emotional expression in LLM outputs that is universal, highly flexible, and well controllable, as shown by extensive experiments and verification. This method has broad application prospects in fields involving emotional output from LLMs, such as intelligent customer service, literary creation, and home companion robots. Extensive experiments on various LLMs with different scales and architectures demonstrate the versatility and effectiveness of the proposed method.